💻 What Launched today at Full Stack Week

Presented by: Jon Kuperman, Kristian Freeman, Ashcon Partovi, Kabir Sikand

Originally aired on November 16, 2021 @ 3:30 PM - 4:00 PM EST

Join our product and engineering teams as they discuss what products have shipped today!

Read the blog posts: "Introducing Services: Build Composable, Distributed Applications on Cloudflare Workers", "JavaScript modules are now supported on Cloudflare Workers", "Automatically generating types for Cloudflare Workers", "Developer Spotlight: James Ross, Nodecraft", and "wrangler 2.0: a new developer experience for Cloudflare Workers".

Visit the Full Stack Week Hub for every announcement and CFTV episode — check back all week for more!


Transcript (Beta)

All right, hello and welcome to another day and another episode of what we launched today at Full Stack Week.

For anyone who tuned in yesterday, I'm Kabir Sikand.

I'm a product manager on the Cloudflare team and we'll go around and do some quick intros.

But before we do: as the title might indicate, today's segment is primarily about all the things we launched, announced, and talked about on our blog during Full Stack Week.

So without further ado, Ashcon, want to give a quick intro? Yeah, sure. My name is Ashcon Partovi.

I'm also a product manager on the Workers team, and I primarily focus on our developer tools, including Wrangler, which you'll hear more about today.

So really excited to be here. Jon? Yeah, Jon Kuperman. I'm a developer advocate working closely with Ashcon on developer tooling stuff.

Yeah. Kristian? Hey, Kristian Freeman.

I manage our developer advocacy team, and today we published a post showcasing a great developer in our community, which we'll talk a little about during this call.

Should I intro Ashcon and start asking you questions, or do you want to talk about...

I changed my mind.

Why don't you start and then I'll ask questions. I can talk a little about today's theme and kind of what ties all these announcements together.

Today, what we really wanted to focus on is developer experience: improving the experience for developers using the Workers platform.

Generally, we think that while new features and innovations on top of the platform are really important, it's even more important to get the fundamentals right: the day-to-day experience of using and interacting with the platform has to be really smooth.

That's why, in today's set of announcements, some are bold, while others are simpler, smaller improvements to the experience.

And I think that's really where we start with Wrangler 2 and all the things that we're working on there.

I don't know, Kristian, if you... Yeah, this is kind of a weird thing because I almost introed myself into the next portion.

I got you. Teamwork.

We'll figure it out. Yeah. So I'm also super... One of my favorite announcements of this week is Wrangler 2.

As with everything else here, and as we'll do throughout the week, we have a blog post to accompany each of the things we're talking about.

But Wrangler 2, I think it'd be safe to call it like a sort of public alpha.

Is that how we're characterizing it, Ashcon?

Or what's the terminology we're using? I'd characterize it as a work-in-progress beta for v2.

As for the motivation behind Wrangler v2: we're not just following the SemVer rules here.

There's a really important reason why we decided to cut a V2.

When we looked at the evolution of the platform, going from scripts to what we'll describe later in the session, services and environments, we wanted to make sure that the core building blocks of Wrangler, the core experiences like running your worker locally and testing and publishing really quickly, were really polished.

That took a revised approach, and we had to go back to the drawing board for some things, because we really wanted to improve how it works.

For instance, one of the big features of Wrangler 2 is a totally revamped wrangler dev experience.

If you're not familiar with wrangler dev, it lets you test, run, and debug a worker on localhost, while the code is actually running on Cloudflare's edge.

Previously, with Wrangler 1.0, there were some challenges with that.

While it's realistic, it can sometimes be a little slow.

And the file watching, which listens for changes in your code locally, can sometimes get out of sync with the edge.

And so, we wanted to spend a lot of time to revisit that experience and get it right.

We heard from developers that while Wrangler was great for publishing and updating their scripts, some of the more hands-on features needed a little more work.

So with dev, and this segues into another part, we've also integrated with Miniflare.

Miniflare is a project that recently joined the Cloudflare organization.

It was actually started by Brendan, an intern who joined us last summer.

Essentially, it lets you run workers locally by emulating the Workers runtime in Node.js.

And that's a really great experience.

The community has absolutely loved it, and there have been a lot of great improvements to Miniflare both before and after it joined Cloudflare.

So we wanted to tie these two experiences together, running code on the edge on our network and running code locally, in a way that just works with both.

And you can switch easily between the two modes.

And that's a really big part of the Wrangler dev experience that we're trying out with Wrangler 2.

Yeah. I think that's probably the biggest thing for me that I found really exciting about Wrangler 2.

I've been here for, I guess, like two and a half years now, a long time in Cloudflare terms.

And something I think we didn't quite understand when I joined, early on in Wrangler's life, is that we had a very binary perspective on this stuff. Some people said, oh, we need a local development experience.

People want to unit test, they want to work offline, stuff like that.

And other people would say, oh, it needs to be on the edge, we can't replicate that network scenario or whatever locally.

But I think what we've discovered is it's more nuanced than that.

You need both for different situations, or at least you want to have the ability to reach for both of them.

So knowing that, and seeing how much of a success Miniflare has been, I think we've definitely taken the right approach there.

Is that how your team thinks about it? Yeah, it's especially relevant to the development life cycle.

Miniflare is really great for iterating quickly.

We're working on improving edge development speeds, but to be fair, it's really hard to compete with localhost.

So sometimes it's fine to run your code locally while you're in the weeds of developing, and then switch to an edge preview once you want an end-to-end test of something.

Unit testing, which you mentioned, is another thing Miniflare does a really good job of, and it's hard to pull off on the edge.

It's hard to make your unit tests easy to run locally while the code runs remotely.

It's something we want to tackle, but in the meantime, Miniflare and the new Wrangler 2 integration handle it really well, and we'll keep improving on it as we go.

We don't want the remote and local experiences to be two different things.

We want them to be one: the same tools and the same configuration you use for your remote code and your remote debugging sessions should work locally.

So we definitely see it that way, yeah.
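Because a module-syntax worker is just an object whose `fetch` method takes a `Request` and returns a `Response`, the crudest form of a local unit test is to call that method directly. The sketch below assumes a Node.js 18+ runtime, where `Request`, `Response`, and `URL` are globals; it illustrates the idea only and is not how Miniflare or Wrangler 2 work internally. The `/health` route is hypothetical.

```typescript
// A tiny worker in module syntax: an object whose fetch() maps a Request to a Response.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const { pathname } = new URL(request.url);
    // "/health" is a hypothetical route used only for this example.
    if (pathname === "/health") {
      return new Response("ok", { status: 200 });
    }
    return new Response("not found", { status: 404 });
  },
};

// A minimal in-process "unit test": build a Request, call the handler directly,
// and check the Response. No network and no edge preview are involved.
async function expectStatus(path: string, expected: number): Promise<void> {
  const response = await worker.fetch(new Request(`http://localhost${path}`));
  if (response.status !== expected) {
    throw new Error(`${path}: expected ${expected}, got ${response.status}`);
  }
}

void (async () => {
  await expectStatus("/health", 200);
  await expectStatus("/missing", 404);
  console.log("local checks passed");
})();
```

Miniflare goes much further, emulating bindings such as KV and Durable Objects, so a handler that touches those is better exercised there than with a bare call like this.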

Yeah, for sure. So, I guess the call to action is, as far as I understand, in the blog post, you can go and install this and start working with it today, right?

What do you recommend people do to get started here?

Yeah, so we've launched a beta-tagged release of Wrangler on npm, with a really simple one-liner install you can run to test it.

Another benefit, and a new thing we're working on with Wrangler 2, is no configuration.

One of the points in our blog post is "no configuration, no problem."

So, really, all you need now is just a JavaScript file, and that is it to be able to run a worker.

And while it seems like a small thing, it's a really big deal to be able to get started with just that.

You don't even have to decide on what you're naming it or anything like that.

So you can run npm install wrangler@beta.

If you already have Wrangler 1.0 installed, you can use npx, which also makes it easy to run the beta.

So run npx wrangler@beta followed by any of the different commands.
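To show what "just a JavaScript file" means in practice, here is a sketch of an entire project as one file, with no wrangler.toml beside it. It is written in TypeScript to match the other examples here; whether the beta accepts a .ts entry point directly, the file name `index.ts`, and a dev command along the lines of `npx wrangler@beta dev index.ts` are assumptions, so check the Wrangler 2 README for the exact invocation.

```typescript
// index.ts (hypothetical file name): the whole project, with no wrangler.toml beside it.
// Module syntax: the default export's fetch() handles every incoming request.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const { pathname } = new URL(request.url);
    return new Response(`Hello from ${pathname}`, {
      headers: { "content-type": "text/plain" },
    });
  },
};

export default worker;
```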

If you're using Wrangler 1.0 right now in production or in your CI/CD flows, we definitely encourage you to stick with it for now.

We're working on a plan to migrate from Wrangler 1.0 to 2.0 in a way that just works, so you don't have to worry about rewriting all your configuration into some brand-new format.

So it'll all just work, and you can keep track of our progress on the Wrangler 2 repository.

We made a separate repository for Wrangler 2 while we work on the beta, and once that's done, we'll merge it into the original repo.

So you can track our progress there. But definitely try it out.

Give us some feedback. If you want to start a new project on the weekend, use the Wrangler beta.

Let us know how it works, what you think, what we should add to it, what we're missing.

The GitHub repo has a really good rundown of the different tickets and areas we want to focus on, and we'll be really active in triaging and maintaining those.

So, definitely stick around and stay tuned as we develop it, and we'll update everyone when it's fully ready and they can do the final cutover from 1.0 to 2.0.

I'm looking at the time, so maybe we should switch gears and talk about the next post, types for Workers?

Yeah. Just to cap that off, I've been playing with the new Wrangler 2.0 all day.

It's very, very fun.

It simplifies some things and adds more options in other places.

I think it's just very well designed. It's a really good time.

But yeah, I'll start off with types for Workers. A little context before I talk about what we did: we have our Workers runtime, which is a big C++ code base, and it exposes all the APIs you get to hook into when you're building workers.

And we have type packages for both Rust, the workers-rs package, and TypeScript, the workers-types package, to help with editor autocompletion, compile-time checking, and everything like that.

Historically, that's been a manual process: we'd change something in the runtime, someone would make a ticket, and then eventually we'd go through and update the type definitions in these two packages.

The first of the two problems we were running into was types getting out of date.

And probably the bigger problem is that for a lot of APIs, we support a high percentage of the methods, but not all of them.

So we type them out so folks get all these benefits, but we might not have 100% spec-compliant support for those APIs.

That led to a situation where folks would build an app, TypeScript would compile in their editor and say, yep, that looks totally great, and then they'd deploy it and get a runtime error like, actually, you can't call this AbortController method.

The project, which was done by the same intern who built Miniflare, is basically a script that goes through our private C++ runtime code, grabs all of those types, generates an abstract syntax tree of all the type data, and then publishes it.

And so, we publish three things now.

We publish the Rust and TypeScript definitions, so those will be up to date, and they'll also be far more accurate, since they'll actually specify which methods we do and don't support.

And the other thing we publish alongside them, which I'm really excited about, is the IR, the intermediate representation itself.

So, we've got this big JSON file with all the type data, and then we've got another JSON file with the schema, the format of it.

It's the exact file we use to generate our types, and that should open the door for anyone else.

Whether you're inside Cloudflare or out in the community, if you want to generate your own types for another language, like Go or ReasonML, you should be able to do so.

So I'm really excited that we have these very up-to-date, accurate types.

That's a huge win, but I'm also really looking forward to reaching out to other communities, seeing what other languages people are using, and collaborating to build more and more type packages for whatever you're using.
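To illustrate why publishing the IR matters: once type data lives in a language-neutral JSON file, a generator for a new target language is mostly a tree walk. The sketch below invents a tiny IR shape (the field names, including a `supported` flag, and the `ExampleController` class are assumptions, not the real schema) and emits TypeScript declarations that leave out unimplemented methods, mirroring the point about specifying which methods are and aren't supported.

```typescript
// Hypothetical, heavily simplified IR shape. The real published JSON and its
// schema are richer; these field names are illustrative only.
interface IrMethod {
  name: string;
  params: { name: string; type: string }[];
  returns: string;
  supported: boolean; // hypothetical flag: does the runtime implement this method?
}

interface IrClass {
  name: string;
  methods: IrMethod[];
}

// Emit a `declare class` per IR class, skipping methods the runtime does not
// implement, so the editor rejects calls that would fail at runtime.
function toTypeScript(classes: IrClass[]): string {
  return classes
    .map((cls) => {
      const members = cls.methods
        .filter((m) => m.supported)
        .map((m) => {
          const params = m.params.map((p) => `${p.name}: ${p.type}`).join(", ");
          return `  ${m.name}(${params}): ${m.returns};`;
        });
      return [`declare class ${cls.name} {`, ...members, "}"].join("\n");
    })
    .join("\n\n");
}

// Example input: one class with one supported and one unsupported method.
const ir: IrClass[] = [
  {
    name: "ExampleController",
    methods: [
      { name: "abort", params: [{ name: "reason", type: "unknown" }], returns: "void", supported: true },
      { name: "pause", params: [], returns: "void", supported: false },
    ],
  },
];

console.log(toTypeScript(ir));
```

A Go or ReasonML generator would walk the same structure and print different syntax, which is the kind of community contribution the published IR makes possible.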

Yeah, I think it's great that we're not just publishing the final TypeScript or the final Rust; you can actually take the intermediate representation and generate types for different languages.

And certainly, as Jon said, if anyone really wants great runtime types for Go or any of these other languages, chat with us; we'd be happy to help.

Yes, please reach out. Yeah. And I think it's a very cool compromise: you've got some very sensitive code that you don't want to open source, but you also want to be a really good open source community member and steward.

And so, I think it's like this very cool extra step for us to say, hey, not only are we improving our product, but we're also giving you as much as we can, right?

Like, as much as we can give you. Another comment I heard today was, oh, we should update our documentation to read from the IR.

So, like, why are we manually updating our API docs now that we're publishing this thing?

So I really hope we keep using it. We'll have this one file that's open source.

It's what we use for Rust, for TypeScript, and for docs.

If people add support for other languages, I think that's a step in a great direction, where we open source everything we possibly can without compromising any security.

So yeah, really exciting there. There's also a side story to this: one of the things we looked into at the beginning was whether we could just adapt the existing Web Worker types or other types out there.

And as Jon mentioned earlier, it's hard to become spec compliant with a lot of these APIs.

It takes a lot of time. Sometimes when we launch a new feature, we'll release the set of APIs we think developers will need most and plan to get to the rest later.

But this is also a great forcing function for us at Cloudflare to look at the existing APIs we have and identify the places where we can become more spec compliant.

And we're starting to revisit some of the JavaScript APIs we implemented a while ago and make sure they're up to date and spec compliant.

I'm sure you'll be hearing more about that as time goes on and as we make those improvements.

But hopefully, a great side effect of this is that our types actually become closer to the actual spec.

So if you have code that's running in the browser and it uses one of the standard web APIs, you can be confident that it just works on Cloudflare Workers.

So, I think we'll also be improving that as well. And then just kind of another follow-up on that.

If folks are building things and running into APIs that we're not supporting and are polyfilling or working around them, also please reach out to us.

We're trying to conquer as much as we can there, but like Ashcon said, it's a lot of work and it's really difficult.

So please reach out to us on Discord, Twitter, or wherever with any user stories, like, hey, this framework needs this fetch method, or this library I'm using relies on a web API that's not fully supported.

We'll really try to work with you on getting that stuff prioritized.

Awesome.

Okay. So I think we can move on to the next one. I wanted to talk with Kabir a little bit about services.

Could you tell us a little bit about what services are?

Yeah. And I'll tag-team this one with Ashcon, because both of our teams put a lot of work into this particular effort.

But a little bit about services.

Very early in the blog post, you'll see we introduce the impetus for why we wanted to build this.

And really, we're thinking about this as the next evolution of what a script is.

Classically on Cloudflare Workers, really until today, you would deploy scripts to the edge.

That was JavaScript code you would deploy onto Cloudflare's network, and we'd handle the rest for you.

Starting today, we're introducing a new higher level concept of services.

A service contains not just your script but a series of environments.

Users can add or remove environments.

By default, as of today, every one of your existing scripts has been moved over to a production environment.

The way we envision users using this is adding a staging environment, a development environment, or maybe a canary or blue-green setup, depending on your deploy strategy.

And there's all sorts of things you can do from there just using that flexibility.

Within each environment, there's a series of deployments, and you can point to a specific deployment as the active one.

You can see where we can go from there. It's a really great building block for doing interesting deploy strategies natively within the Cloudflare Workers ecosystem.
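To make the service, environment, and deployment hierarchy concrete, here is a small in-memory model of it. Everything in it (the `Service` class, its method names and fields, and treating promotion as "deploy a copy of the active deployment") is a hypothetical illustration of the concepts described in this segment, not Cloudflare's API or data model.

```typescript
// Hypothetical model of the hierarchy described above, not Cloudflare's API:
// a service owns named environments, each environment keeps its deployment
// history, and one deployment per environment can be marked active.
interface Deployment {
  id: string;
  scriptHash: string; // stands in for the uploaded code
}

interface Environment {
  deployments: Deployment[];
  activeId: string | null;
}

class Service {
  readonly name: string;
  private readonly environments = new Map<string, Environment>();

  constructor(name: string) {
    this.name = name;
    // Mirrors the migration described above: existing scripts land in "production".
    this.addEnvironment("production");
  }

  addEnvironment(envName: string): void {
    if (!this.environments.has(envName)) {
      this.environments.set(envName, { deployments: [], activeId: null });
    }
  }

  // Record a new deployment and make it the active one for that environment.
  deploy(envName: string, deployment: Deployment): void {
    const env = this.envOrThrow(envName);
    env.deployments.push(deployment);
    env.activeId = deployment.id;
  }

  // Roll back or forward by pointing the environment at an existing deployment.
  activate(envName: string, deploymentId: string): void {
    const env = this.envOrThrow(envName);
    if (!env.deployments.some((d) => d.id === deploymentId)) {
      throw new Error(`no deployment ${deploymentId} in ${envName}`);
    }
    env.activeId = deploymentId;
  }

  // Promote: deploy a copy of one environment's active deployment into another.
  promote(from: string, to: string): void {
    const current = this.active(from);
    if (!current) throw new Error(`${from} has no active deployment`);
    this.deploy(to, { ...current });
  }

  active(envName: string): Deployment | undefined {
    const env = this.envOrThrow(envName);
    return env.deployments.find((d) => d.id === env.activeId);
  }

  private envOrThrow(envName: string): Environment {
    const env = this.environments.get(envName);
    if (!env) throw new Error(`no environment named ${envName}`);
    return env;
  }
}
```

Keeping the deployment history per environment is what makes rollback a pointer change rather than a redeploy in this model.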

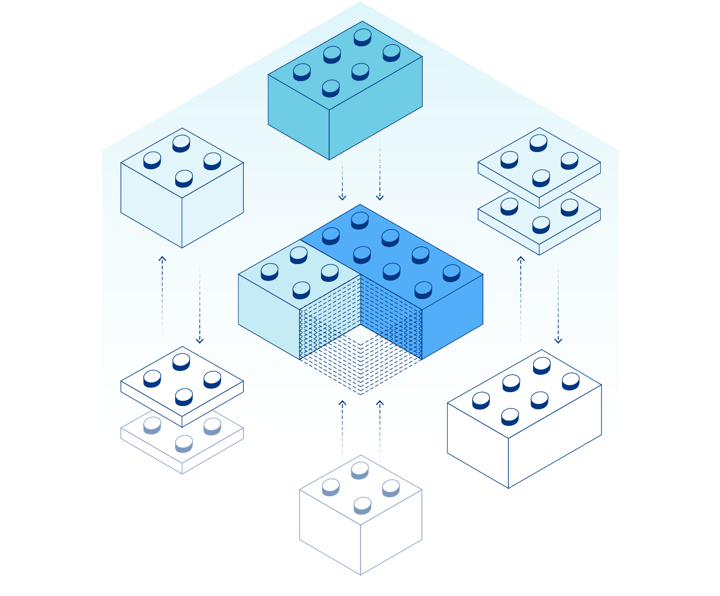

And finally, another exciting thing about services is that we've built them to be a more composable system.

So, you can have services call other services.

We've implemented that via a new API, a binding called a service binding.

It's very similar to what you're used to if you've ever used our KV or Durable Object bindings.

You define a variable name, and then you can call fetch on it, pass a request in, await the response, and do whatever you need to in your other service.
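A sketch of the calling side of a service binding, assuming a binding named `AUTH` (a hypothetical name) that points at another service. The `Fetcher` interface is declared locally so the example stands on its own, and it models only the `fetch(request)` call described above; the configuration that declares the binding is not shown.

```typescript
// Minimal local model of a service binding: something you can fetch() a Request on.
// (The real binding type comes from the runtime; this narrower interface is an assumption.)
interface Fetcher {
  fetch(request: Request): Promise<Response>;
}

// Bindings arrive on the env parameter; AUTH is a hypothetical binding name.
interface Env {
  AUTH: Fetcher;
}

const gateway = {
  async fetch(request: Request, env: Env): Promise<Response> {
    // Pass the incoming request to the auth service and await its response.
    const authResponse = await env.AUTH.fetch(request);
    if (authResponse.status !== 200) {
      return new Response("unauthorized", { status: 401 });
    }
    return new Response("welcome", { status: 200 });
  },
};

export default gateway;
```

Because the binding is just a value on `env`, a local test can substitute any object with a compatible `fetch` method, which keeps each service testable in isolation.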

So this really opens the floodgates for you to migrate from a monolithic approach to segmenting your services out, versioning them, and promoting them from dev to staging to prod, the classic ways you'd imagine developing on a modern web platform.

Yeah, I think that's another great example of one thing I love about the way you all work: we don't just go out and build products.

We build these really nice abstractions or building blocks, primitives.

Those are words that I think people use a lot when they talk about Cloudflare things.

And you can see us moving towards these really great products, but it's not just like, oh, we did a thing.

It's like we're giving you the ability to really customize and craft your own experiences, which I just love.

I think that's very cool. Yeah, one of the things we were thinking about when we first envisioned what environments would look like, and how we could make that developer experience better, is that different teams set up their environments in a lot of different ways.

And it's a tricky balance between providing a really high-level experience and like a low-level tool or a low-level building block.

I think what we get really right is knowing which parts of the platform need to be lower-level building blocks and which parts can be higher-level abstractions that just work.

I think environments is somewhat in between those two, where you can create as many different environments or different types of environments as you want.

You can have a staging KV namespace or different secrets in one environment.

There are all sorts of ways you can do it.

You could have one environment per team member. You could decide to just have a single staging environment.

It's really up to you, up to your team, how you decide to use it.

However you set it up, promoting and copying between environments is so easy that the setup doesn't really matter, because it just works seamlessly.

And when we tie that together with service bindings, it enables a lot of really interesting capabilities, like being able to load-balance between two environments or two services.
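One way the "balance between two environments or services" idea could look, as a sketch: a front worker holding two bindings routes each request to one of them. The binding names `STABLE` and `CANARY`, the 10% weight, and the locally declared `Fetcher` interface are all assumptions for illustration.

```typescript
// Minimal local model of a service binding (an assumption; the runtime supplies the real type).
interface Fetcher {
  fetch(request: Request): Promise<Response>;
}

// Hypothetical bindings pointing at two versions or environments of a service.
interface Env {
  STABLE: Fetcher;
  CANARY: Fetcher;
}

// Fraction of traffic sent to the canary: 0.1 means roughly 10% of requests.
const CANARY_WEIGHT = 0.1;

// Pick a target from a draw in [0, 1). Taking the draw as a parameter keeps this testable.
function pickTarget(env: Env, draw: number): Fetcher {
  return draw < CANARY_WEIGHT ? env.CANARY : env.STABLE;
}

const splitter = {
  async fetch(request: Request, env: Env): Promise<Response> {
    return pickTarget(env, Math.random()).fetch(request);
  },
};

export default splitter;
```

Random choice keeps the sketch short; sticky assignment (for example, hashing a user or session ID into the same [0, 1) range) is a common refinement so a given user consistently sees one version.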

Yeah. The use cases you'll be able to build with service bindings and the services abstraction itself are really quite interesting.

One thing to note is that it's a little different from kind of your classic microservices architecture in terms of how we built this, right?

It shows off some of the power of isolates and the pipelines we've built on top of our runtime and the Workers platform.

And it mirrors the way we're already using these same technologies internally.

We build Cloudflare's own tools on top of Workers fairly often, and we wanted to give that power to our users: the ability to segment code into different areas that talk to each other within the same colo, the same data center, the same metal, without having to worry about the latency cost.

There's a really interesting angle here, and this of course ties into the theme of Full Stack Week: with services and service bindings, teams can deploy independent applications or pieces of software that are modular and work independently, yet have the performance of basically a library.

And the abilities and possibilities that open up with that are just huge.

And being able to architect it that way, with all your services stitched together into one pipeline or one deployment, is really powerful.

There's certainly a whole list of teams, even internally at Cloudflare, lined up and ready to try it out.

And we can't wait for you all to try it as well. Yeah. Just to be mindful of time: I think we could talk about services and service bindings for a whole 30 minutes here, because it's pretty exciting.

And I'm sure we'll have some guests on to talk about what they've built in the future.

But there were two other announcements today, really quickly.

Ashcon, do you want to talk a little bit about the JavaScript modules that were announced earlier today?

Yeah, sure.

We had given a sneak preview of JavaScript modules with Durable Objects, where you define a Durable Object with a class.

We've finally brought that approach of using modules to define a worker to the rest of the platform.

So now everyone can use modules, regardless of whether you're using durable objects or not.

Check out the blog post.

It's a really simple API. All you have to do is define a default export.

And you can import different libraries, you can export different event handlers.

It's really simple. We've put a lot of thought into designing it and making sure it stays simple.

In terms of JavaScript standards, it should just work.
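A sketch of the shape described above: a default export carrying one method per event type. The `fetch` and `scheduled` handler names and their `(event, env, ctx)` parameter order follow the module syntax; the `ScheduledEvent` and `ExecutionContext` interfaces are minimal local stand-ins (the real ones come from the runtime and its type packages), and `GREETING` and `recordRun` are hypothetical.

```typescript
// Module syntax: handlers are methods on the default export. The older Service
// Worker style, which remains supported, registers callbacks on a global instead:
//   addEventListener("fetch", (event) => event.respondWith(new Response("hi")));

// Local stand-ins so the example type-checks on its own.
interface ScheduledEvent {
  cron: string;
  scheduledTime: number;
}

interface ExecutionContext {
  waitUntil(promise: Promise<unknown>): void;
}

// GREETING is a hypothetical variable. In module syntax, bindings and variables
// arrive on `env` rather than as globals.
interface Env {
  GREETING: string;
}

// Hypothetical background task kicked off by the cron handler.
async function recordRun(cron: string, scheduledTime: number): Promise<void> {
  console.log(`cron "${cron}" fired for ${new Date(scheduledTime).toISOString()}`);
}

const worker = {
  // Handles HTTP requests.
  async fetch(request: Request, env: Env): Promise<Response> {
    const { pathname } = new URL(request.url);
    return new Response(`${env.GREETING} from ${pathname}`);
  },

  // Handles Cron Triggers; waitUntil lets background work finish after the handler returns.
  async scheduled(event: ScheduledEvent, _env: Env, ctx: ExecutionContext): Promise<void> {
    ctx.waitUntil(recordRun(event.cron, event.scheduledTime));
  },
};

export default worker;
```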

So we're pretty excited about it. And you know, if you run into any issues, let us know.

But at least internally at Cloudflare, once people start using modules, it's really hard for them to go back to the Service Worker API. We will continue to support it, by the way: you can still upload and use workers written with the old Service Worker addEventListener API.

But we think modules are a really cool experience.

We encourage you to try it out.

So that's what's new with modules. And it's a nice celebration, because it took absolutely forever for the JavaScript ecosystem to get here.

So it's here, we have it, we have a real API for modules.

And it's awesome to jump right in and start using it.

Yeah, and from the developer advocacy perspective, we've been thinking about this a lot too, and we're excited to work more with Ashcon's team on first-party (for lack of a better term) support for modules in our documentation, examples, and so on.

Yeah, I really enjoy writing with them.

I remember when I first saw the first couple of module workers, I think around the Durable Objects launch.

I was like, oh, this is much easier to reason about, at least from my perspective.

So yeah, I guess we're running short on time.

So let me bring it home and cover the last thing here.

We've been publishing, and will continue to publish, developer spotlight posts throughout Full Stack Week; I think there are five of them in total.

We have a ton of really incredible smart developers building stuff on the platform.

And we wanted to highlight companies of all different sizes, solo developers, and basically anyone doing interesting stuff with the platform.

Today's was focused on Nodecraft. Nodecraft is a Minecraft hosting company.

Well, they host a bunch of other games too, but I think Minecraft is their most popular one, and, from talking to them, it's surprisingly resource- and infrastructure-intensive.

It's pretty, pretty crazy to hear about the kind of scale they're dealing with there.

In particular, the post focuses on Workers and Pages, and on the stuff I really love to nerd out on with people: how building with Workers has literally changed the way they think about building applications.

They very often go Workers-first and Jamstack-first, things like that.

They were very early users of Workers Sites and are literally contributors to and maintainers of our kv-asset-handler package.

So they're super involved with all of that. And from talking to them, I know there are a lot of things coming up that they're excited about as well.

So it's a really interesting read. The part I found most interesting is that they've built out a bunch of APIs for things you wouldn't even think are that big of a deal, like getting Minecraft player stats.

And they're handling something like 80 million requests daily without breaking a sweat using Workers.

So definitely. I was blown away to read that. I was like very surprised, but it's a really cool post.

Yeah. So thanks for all the time today, team. For anyone watching, if you want to keep following along, we're going to do even more announcements this week.

And every day we'll have a few more topics like this to talk about.

So we'll see you tomorrow. Enjoy the rest of the week.