💻 Service worker rendering, in the cloud and in the browser

Presented by: Jeff Posnick, Luke Edwards

Originally aired on November 16, 2021 @ 12:00 PM - 12:30 PM EST

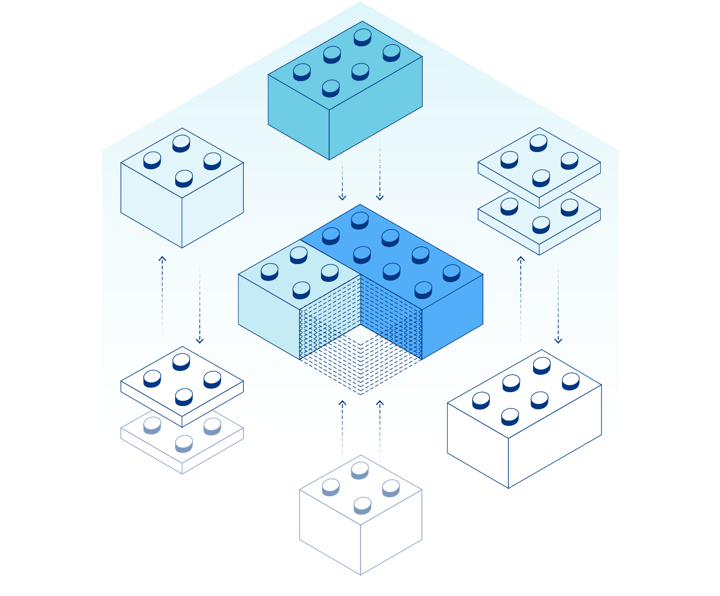

In this Full Stack Week session, you will learn how to render your site entirely on demand via workers: Cloudflare Workers to generate HTML in the cloud, and service workers to cache and generate HTML client side.

Visit the Full Stack Week Hub for every exciting announcement and CFTV episode — and check back all week for more!

English

Full Stack Week

Transcript (Beta)

Hi, everyone. My name is Luke Edwards. I am a developer advocate here on the Cloudflare Workers team.

Today I am joined by Jeff Posnick, a member of the web DevRel team at Google.

And today Jeff and I will be talking about service worker rendering, both in the cloud and in the browser.

Before we get started, Jeff's going to be walking through most of this here, driving the conversation.

But if you have any questions throughout the talk, please write in to livestudio@cloudflare.tv, and we'll try to see them and answer them as we go.

So, cool. Jeff, you want to take it away?

Sure. Yeah, happy to dive in with some thoughts and hopefully a nice back and forth conversation.

So, my history with service workers dates back a long time, actually, almost to their inception.

And I've been working on a lot of tooling related to making it easier for developers to build service workers and to kind of think about the routing and the cache logic.

But that has all been for browser-based service workers.

So, going back to like 2014, 2015, I was working on a couple of projects called sw-precache and then later sw-toolbox.

And they kind of got folded together into the umbrella project that we call Workbox and rewritten a bit.

All three of them have been majorly useful to the entire ecosystem, for the record.

I appreciate that. And it's been kind of weird, because the ecosystem around service worker tooling, and around service workers in general, has historically been kind of small.

I mean, partly I hope it's because people find Workbox useful. There are a few other libraries out there that do similar things.

But a lot of the time, when people integrate service workers, first of all, those service workers only run in the browser, and they've been using Workbox.

And a lot of what we've been thinking about is, okay, what does it mean potentially to have service workers that are doing more than just kind of the common caching operations that a lot of people think about when they hear service workers?

What does it mean to actually generate HTML, respond to navigation requests, do more than just what the browser could do already via the browser's cache?

And yeah, a lot of that conversation has been, I think, kind of blocked a little bit by the fact that service workers have been limited to a browser-only runtime.

And the other half of the story, especially how you respond to navigation requests, meaning requests for HTML documents, is so dependent on your server.

And what we've seen with service workers is that the real performance wins, and beyond performance, the offline functionality that a lot of people associate with service workers, really require a tight coupling between what your service worker is doing and how your HTML is generated.

So the ideal case is that you make a request for any arbitrary URL within your origin after the service worker has been installed locally, and the service worker knows instantly how to respond to it.

It doesn't have to block on the network in order to say, okay, let's contact this PHP backend or JSP backend or whatever else to get the actual content.

It's able to generate all that locally, usually a lot faster than it would take to get a response from the network.

I know that might be a little controversial, given that you all have very fast networks and stuff like that, but still, people don't always have reliable network connections.

Even if you have a really good edge server, even if you have really good infrastructure... Traditionally, I'm in New York, and we usually talk about being on the subway as the time when you have flaky or no Internet, but it's actually gotten pretty good lately.

But it's still the canonical example. You have to address it.

Yeah. So if you're in that situation, even if you have a really good backend infrastructure, it can take time to respond to that navigation request for HTML.

So we want to promote architectures where the service worker, after it's been installed locally in the browser, knows how to respond to pretty much any navigation request.

And yeah, what we've seen catch on, historically, is very focused on the single page app use case.

And that is because there's just a really good model for mapping that architecture to figuring out how the service workers can respond to navigations.

And that's by using what we call an app shell. That app shell just has a basic skeleton, sometimes skeleton loading states, for your application.

But it's basically HTML that can handle any page on your site. The service worker responds with that HTML.

It'll bootstrap the single page app logic. And then that single page app logic kicks in and will actually render the contents based on your routing for the given page.
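To make the app shell model concrete, here is a minimal TypeScript sketch of the navigation handler. It assumes a hypothetical `ShellCache` interface standing in for the browser's Cache Storage and a hypothetical shell URL, `/app-shell.html`. Every navigation gets the same cached shell, and the handler only falls through to the network if the shell was never cached.

```typescript
// Hypothetical stand-in for the browser's Cache Storage (only `match` is used).
interface ShellCache {
  match(url: string): Promise<Response | undefined>;
}

// Hypothetical URL of the precached app shell document.
const APP_SHELL_URL = "/app-shell.html";

// Intended to be called only for navigation requests, e.g.:
//   self.addEventListener("fetch", (event) => {
//     if (event.request.mode === "navigate") {
//       event.respondWith(respondWithAppShell(event.request, cache, fetch));
//     }
//   });
async function respondWithAppShell(
  request: Request,
  cache: ShellCache,
  network: (req: Request) => Promise<Response>,
): Promise<Response> {
  // Every URL gets the same shell; the SPA's client-side router then
  // renders the content for request.url once the shell boots.
  const shell = await cache.match(APP_SHELL_URL);
  // Only go to the network if the shell was never cached.
  return shell ?? network(request);
}
```

The handler never looks at the path, which is exactly why the model is easy for SPAs: routing is deferred to the client-side code that the shell loads.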

Sure. Can we quickly bring this back to a more... Not that we're high in the clouds, but let's bring it back to a more tangible example for people who may not be front end developers.

And Jeff, correct me if I'm wrong here.

So what's happening is you download a website, you visit a website, and then while visiting it, some of the assets for that website may include a service worker registration.

And what that is, is the browser then goes back and fetches this special script that installs itself in the background.

And while it's there, it's basically a barnacle on the page that is listening and watching everything that goes on.

And so... Sure. Barnacle's an interesting analogy that I've heard before, but sure.

I'll go with it. Yes. I am sometimes known for my weird metaphors, but sure.

Barnacle. But it's a lot more useful than a barnacle.

So it sits there and it listens to what goes on. And because it has a window into the activity that's going on, especially network activity, it can intercept network requests.

And as you were saying, it can generate HTML responses directly on the phone, the tablet, the computer, instead of relying on a server to come back with the response.

Good so far? Yeah. And a lot of people hear that and they're like, okay, well, the browser's HTTP cache can already do that.

So what's the difference? And the truth is, there is a lot of overlap. But a service worker in the browser does give you some extra logic around fallbacks.

Like if you are offline and there's an image and that image isn't already cached either in the browser or anywhere else, you maybe could have fallback logic to use a generic image and stuff like that.

But yeah, really the focus, and the main differentiator between what you can do with a service worker and what you previously could do with the browser cache, is responding to navigation requests: responding to those initial requests for a given URL with HTML.

So that's what we try to focus on.

And we do hear the same thing about the Workers cache and the CDN, because it's more or less the same topology, the same relationship.

So the CDN itself is like the browser cache: it's more of an HTTP cache, it's more static. But Workers is a programmable cache that you can interact with, react to, and serve dynamic content with.

Yeah. Yeah. Cool. So back to SPAs; I had interrupted you at the app shell.

So with an app shell, while this barnacle is listening in on your activity, it can intercept the actual request itself. And because it's all happening on the device, that must be ideal for offline scenarios too, right?

Yeah, totally. And it's really easy to figure out how to handle.

It's not even offline, it's just network independent. So even if you have a network connection, you just don't have to use the network connection in this model, but you respond right away from the cache.

And like I said, you have a very clear model for what HTML you're going to respond with.

And it's this static app shell that you can use for any given URL.

So you don't have to worry too much about routing.

You do have to worry about routing, but it's the same routing concerns that you have in your client-side SPA anyway.

But not everybody's building SPAs.

And we've struggled to figure out, okay, what does it mean to take all these advantages, the performance advantages and the offline advantages that you get with the app shell model, and bring them to non-SPA use cases?

And so that's what I've been looking into and kind of just playing around with these bespoke solutions.

And that's where we'll segue into why I'm super excited about the Cloudflare Workers environment, and how it can be used to coordinate some of the HTML generation between the service worker running in the browser and the service worker running at the edge.

So I've played around with some solutions.

I gave a talk, I think in 2018, at the Google I/O conference that went beyond single-page apps, about alternative architectures that you can use.

And in that case, it was using a backend that shared a lot of the logic with rendering code that could happen in the browser's service worker.

But the backend environment was not a service worker environment.

It was kind of just like a serverless function type environment. So it shared a decent amount of rendering logic in terms of templates, and it also shared a decent amount of routing logic.

And it was actually using one of the libraries that you worked on back in the day.

So I chatted with you way back when about the regexparam library that we were using to actually handle the routing.

There were routing rules and templating rules on the server.

And then in the browser's service worker, I tried to share as much of that code as possible, and used Workbox for some of the caching logic and some of the streaming HTML response generation logic.

But it still felt kind of awkward, honestly.

It was not the exact same code. It was maybe 50% code sharing between the serverless environment and the browser's service worker.

And anytime there's a gap, anytime there's a difference between what's going on in one environment and the other, you have a window for things to go wrong and to get out of sync.

And the last thing you want is to spend time debugging why users see two completely different HTML responses depending upon whether they're hitting my backend, because they don't have the service worker installed locally yet, or getting service worker rendering, because they're a repeat visitor.

So you don't want that drift at all.

You really, really need to share as much routing and templating logic as possible.
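One way to keep routing identical in both environments is to put route matching in a module that the server and the service worker both import. The sketch below is a hypothetical helper in the spirit of regexparam, not that library's actual API: it compiles a path such as `/posts/:slug` into a regular expression and extracts the named parameters.

```typescript
// Hypothetical compiled form of a route like "/posts/:slug".
interface CompiledRoute {
  keys: string[];
  pattern: RegExp;
}

// Turn "/posts/:slug" into /^\/posts\/([^/]+)\/?$/ plus the key list ["slug"].
function compileRoute(path: string): CompiledRoute {
  const keys: string[] = [];
  const source = path
    .split("/")
    .map((segment) => {
      if (segment.startsWith(":")) {
        keys.push(segment.slice(1));
        return "([^/]+)"; // one path segment
      }
      // Escape regex metacharacters in literal segments.
      return segment.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    })
    .join("/");
  // Allow an optional trailing slash.
  return { keys, pattern: new RegExp(`^${source}/?$`) };
}

// Return the captured params, or null when the pathname doesn't match.
function matchRoute(route: CompiledRoute, pathname: string): Record<string, string> | null {
  const result = route.pattern.exec(pathname);
  if (!result) return null;
  const params: Record<string, string> = {};
  route.keys.forEach((key, i) => {
    params[key] = decodeURIComponent(result[i + 1]);
  });
  return params;
}
```

Because both runtimes import the same `compileRoute` and `matchRoute`, a URL that renders a post on the server renders the same post in the service worker.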

So that's kind of where we were at, at least in how I've been thinking about it for like, you know, three years or so.

And then, yeah, I started playing around with Cloudflare workers and realized that, hey, you know, I don't want to say it's the exact same environment.

There are some differences in how you approach caching in a Cloudflare Worker versus a browser-based service worker, but they are so much more similar.

So that was exciting. What are some of the differences you still have to keep in mind when dealing with the cache?

Yeah. So it's kind of thinking about deployments and what ends up being cached after each deployment, what ends up being updated after each deployment.

So if you're in a scenario in which you have a lot of code shared and you have a lot of the routing shared, the one thing that I found I needed to stub out was the actual code that interacted with, I guess it is the Cloudflare key value store.

I hope I'm using the right term. Yeah. Workers KV. Yeah.

Right. So you make some changes, you have some static assets. Just to give some context, and we'll do a little bit of a demo of this later:

I've been playing around with this in the context of my blog. Every developer has a blog, and they always use it for architecture experiments and optimizations like that.

So I definitely fall into that category as well.

Well, at least yours is built. Mine is not built. There you go. Mine has been built, you know, several times at this point.

I keep unbuilding it and rebuilding it as I get excited about new architectures.

So, you know, I feel like this one's here to stay.

Like I'm feeling pretty good about this one, but yeah.

So because it's a blog, there are some templates. This is actually using another library that you worked on, called tempura.

I was like, yes, this seems like a nice lightweight library to use. And for the record, sorry, that came out of my current rework of my blog, which does not exist.

There you go. Yeah. But, you know, I'm glad it was useful to you. It's paying dividends even if it's not used on your own blog.

So there's content for my site, and I have a build process that generates JSON for each blog post, with the actual content for the post and some metadata.

And then I have some templates and there's routing logic.

And every time I make an update, I do a deploy to the Cloudflare Workers environment that pushes things out.

It updates, you know, the actual service worker code that's doing the routing and has all the logic for rendering.

And then it's also pushing these JSON files to the key value store.

So the flow is: a user goes to the blog, say for the first time, and they don't have the service worker installed locally in the browser yet.

They go there, and the service worker installs. You have to make a decision around how much you want to cache locally.

And that's like, that's still a decision that folks need to make based on how they anticipate people using their sites, their own content.

Yeah. You know, if you're a site that has a thousand pages, you probably don't want to cache all, you know, a thousand pages worth of content aggressively the first time the user visits a random page.

So having a different strategy for the client service worker and the Cloudflare Workers environment makes sense in this case.

You obviously need to push out everything to the Cloudflare worker each time you deploy, but you're not going to be caching as aggressively on the client.

And it's nice having that flexibility.
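As an illustration of that split, the edge receives every post on each deploy, while the client precaches only a bounded subset at install time. The `PostMeta` shape and the "most recent N posts" policy below are assumptions for the sketch, not necessarily what the blog does.

```typescript
// Hypothetical shape of the per-post metadata produced by the build step.
interface PostMeta {
  url: string;
  published: string; // ISO 8601 date such as "2021-11-16"
}

// Edge: every post is pushed to the key-value store on each deploy.
// Client: precache only the `limit` most recent posts at install time;
// anything else gets cached at runtime when the user actually visits it.
function selectClientPrecache(posts: PostMeta[], limit: number): string[] {
  return [...posts] // copy so the caller's array isn't reordered
    // Same-format ISO dates compare correctly as plain strings; newest first.
    .sort((a, b) => (a.published < b.published ? 1 : a.published > b.published ? -1 : 0))
    .slice(0, limit)
    .map((post) => post.url);
}
```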

And that's kind of one of the areas where things do differ.

And again, I'm using Workbox. I'm actually using Workbox in the Cloudflare Workers environment, which I don't know if folks have done before, but it was kind of cool to see that your environment was so close to the service worker environment that it really only required polyfilling the location, like self.location. I think that was the only thing I needed to do.
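The `self.location` polyfill can be as small as the sketch below, assuming the shared code only reads fields such as `origin` and `href` that a `URL` object also exposes. The function name is hypothetical, and it takes the scope object as a parameter so it can be exercised outside a worker.

```typescript
// Hypothetical polyfill: give scopes that lack `location` (such as the
// Cloudflare Workers global) a URL-shaped stand-in for WorkerLocation.
function polyfillLocation(scope: { location?: unknown }, origin: string): void {
  if (scope.location === undefined) {
    // URL exposes the fields service worker code usually reads:
    // origin, href, host, pathname, protocol.
    scope.location = new URL("/", origin);
  }
}

// In the Worker, with a hypothetical origin:
// polyfillLocation(globalThis as { location?: unknown }, "https://example.com");
```

With ES modules, imports are hoisted, so the call would need to live in its own module that is imported before anything that reads `self.location`.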

Okay. So, since Workbox... sorry, I have my own library, Worktop.

I know. Worktop is your library, right? I was having trouble with this recently.

Workbox is, you know, a service worker...

Is it fair to call it a utility framework? It's just a bunch of service worker helpers that help you author one.

And we've talked about it already, but because it's able to interact with the cache, and specifically the service worker Cache API, you effectively added an adapter, a very small amount of polyfill code, so that you can use Workbox within the Cloudflare Workers environment to talk to Workers KV at that point, or to the Cache API.

So it's using Workers KV. The other part was the adapter for Workers KV, but it goes beyond that.

It's also, you know, Workbox has this routing module that knows how to talk to, you know, basically knows how to implement a fetch event listener.

So we're using that in both environments as well. So the routing looks exactly the same.

And Workbox also has some libraries for doing streaming responses, which is something that I'm a big fan of in this model.

One of the advantages of multi-page apps, or traditional HTML in general, is that the HTML streams: the browser can render progressively as the response arrives, which is important for large pages.

And you can do that inside of a service worker as well.

So I remember the Cloudflare Workers environment maybe didn't have the ReadableStream constructor for a little bit.

I'm not sure if you're working on that. So that's one of the things that this needs, but the Workbox streaming library basically has fallback behavior if it's not available.
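A rough sketch of that feature detection and fallback follows. It mirrors the idea behind Workbox's streams support check but is standalone code, not Workbox's implementation; `canConstructStreams` and `bufferedResponse` are hypothetical names. When streams aren't available, the parts are awaited and joined into one buffered response, which delays the first byte but produces the same HTML.

```typescript
// True if this runtime lets us construct our own ReadableStream.
function canConstructStreams(): boolean {
  try {
    new ReadableStream({ start() {} });
    return true;
  } catch {
    // Throws (including ReferenceError) when the constructor is missing.
    return false;
  }
}

// Fallback path: wait for every part, then build one buffered response.
async function bufferedResponse(parts: Promise<string>[]): Promise<Response> {
  const html = (await Promise.all(parts)).join("");
  return new Response(html, {
    headers: { "content-type": "text/html; charset=utf-8" },
  });
}
```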

So yeah. You can basically structure the code, and we might be getting to the point soon where I actually show it instead of just talking about it.

But you can structure the code so that it looks almost identical in both environments, and then just swap out one piece: in one environment it interacts with the browser's Cache Storage, and in the other it interacts with the key value store.
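A minimal sketch of that swap, assuming the shared rendering code depends only on a hypothetical `LoadContent` function type. The structural interfaces cover just the methods used: Workers KV's `get(key, "text")`, which resolves to a string or `null`, and Cache Storage's `match()`, which resolves to a `Response` or `undefined`. The adapter names are illustrative.

```typescript
// The shared rendering code only ever calls this (hypothetical) type.
type LoadContent = (key: string) => Promise<string | null>;

// Minimal structural types for the two backing stores.
interface KVNamespaceLike {
  get(key: string, type: "text"): Promise<string | null>;
}
interface CacheLike {
  match(request: string): Promise<Response | undefined>;
}

// Cloudflare Worker: read the pre-deployed JSON straight from KV.
function kvLoader(kv: KVNamespaceLike): LoadContent {
  return (key) => kv.get(key, "text");
}

// Browser service worker: read from Cache Storage, falling back to the network.
function cacheLoader(
  cache: CacheLike,
  origin: string,
  network: (url: string) => Promise<Response>,
): LoadContent {
  return async (key) => {
    const url = new URL(key, origin).href;
    const response = (await cache.match(url)) ?? (await network(url));
    // Treat HTTP errors the same as a KV miss.
    return response.ok ? response.text() : null;
  };
}
```

Each entry point constructs the loader for its runtime and hands it to the shared code, so nothing else needs to know which store it is talking to.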

Nice. I guess one more question before I let you dive into the code, because we do have about 10 minutes.

I want to make sure there's enough time for it.

So is the Cloudflare Worker always returning an HTML stream, or does it depend on what the service worker is asking for?

So. Ideally it will always provide the HTML as a stream. And it uses partial templates to kind of like have, you know, the head that is just a direct read.

The body requires a little bit of extra processing. So it's nice to get the head completely out first, and then the body and the footer.

Like I mentioned, I think initially, when I started playing with this, the Cloudflare Workers environment was missing constructible readable streams, which is one of the prerequisites for actually getting streaming in the Cloudflare environment.

So I think as soon as that, I don't know if it's been implemented. I haven't been keeping up on all of it.

It's definitely been worked on. I think it's almost out the door, but yeah.

So as soon as that happens, it should get the full stream.

But I think the main thing is like it's mainly a performance improvement in the browser's service worker because the browser may not have the body of the post available and might have to go to the network for that.

So because you have streaming in the browser, you always get that head out first so you're not staring at a blank screen.

In the Cloudflare worker environment, it's reading from the key value store.

So it's going to be a pretty fast read. I'm happy to show off maybe a little bit of the code and then some of the actual behavior though.

Yeah, let's do it for sure.

Cool. Okay. Let's see how the screen sharing works. Hopefully.

Please be with us, demo gods. Okay. Are you seeing my GitHub repo? Yes. Cool.

Okay. So yeah, my blog is jeffy.info and here's the repo for that. And yeah, I'm going to maybe dive in.

There's kind of interesting build process and a whole bunch of other stuff that's not really super relevant.

But the main thing is, I have a site, I have a bunch of posts in Markdown, and the JSON that we were talking about gets generated from the Markdown.

So I'm going to actually instead of focusing on that, go to the code for the service worker.

And there are two different targets here: the browser service worker and the Cloudflare service worker, and then some shared code.

For this, I've been using a setup where I use esbuild and ship a final built service worker to Cloudflare.

I know there are a few other ways of doing it.

But here, I'm going to pull up the Cloudflare service worker first.

Here's that polyfill that I was just mentioning. And okay, that's not too much at all.

Yeah, so most of the Cloudflare-specific stuff is just a static loader that gets the asset from the key value store and returns a response for a given URL, and then registering some routes.

So this is, you know, 49 lines of Cloudflare-specific code.

And then the browser-specific code is also, are we at exactly 50 lines? Yeah. So slightly different logic for doing some browser-specific stuff.

It's a stale-while-revalidate strategy for the posts. And again, you want to handle things differently depending on whether you're running in the user's browser or on a server, but there's not very much difference here.
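Stale-while-revalidate can be sketched as follows, assuming a hypothetical `SimpleCache` interface with `match` and `put` and an injected `waitUntil` (in a service worker, `event.waitUntil`). Workbox ships its own `StaleWhileRevalidate` strategy; this standalone version only shows the control flow: serve the cached copy immediately, refresh the cache in the background, and wait on the network only when nothing is cached.

```typescript
// Hypothetical minimal cache interface.
interface SimpleCache {
  match(url: string): Promise<Response | undefined>;
  put(url: string, response: Response): Promise<void>;
}

async function staleWhileRevalidate(
  url: string,
  cache: SimpleCache,
  network: (url: string) => Promise<Response>,
  waitUntil: (p: Promise<unknown>) => void,
): Promise<Response> {
  // Start the network request right away, and store good responses.
  const update = network(url).then(async (response) => {
    if (response.ok) {
      await cache.put(url, response.clone());
    }
    return response;
  });
  // Attach a handler now so a failed refresh (e.g. offline) isn't reported
  // as unhandled while we wait on the cache lookup.
  const quietUpdate = update.catch(() => undefined);

  const cached = await cache.match(url);
  if (cached) {
    waitUntil(quietUpdate); // keep the worker alive for the refresh
    return cached; // stale, but instant
  }
  return update; // nothing cached: wait for the network
}
```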

And then it just has a lot of shared code. So here's all the common stuff that's using Workbox.

Again, it's using Workbox routing, it's using Workbox streams.

I'm using this really cool new web platform primitive called URLPattern, which is actually kind of a replacement for your regexparam library from many years ago. It's a way of doing routing that is native to the browser.

But there's a polyfill as well. And here's where most of the magic happens: there's a streaming strategy going on.

This is, again, building on top of Workbox primitives.

And just everything in this list ends up being streamed, you know, in that order, but the requests for these things can happen asynchronously.

So you're not really blocking anything. So we have templates for the start, and we have some logic here for looking at the event for a given URL: we obviously respond with different content for different URLs, so we need to match against that.

And this load static function is what gets, it's almost like, injected: a different method for the browser service worker and a different method for Cloudflare Workers.

Good. And then we have an end. Sorry, what I just showed previously was actually the code for static assets.

This is code for the actual HTML requests, but it's doing the same thing.
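The "request concurrently, stream in order" behavior described above can be sketched with a `ReadableStream` that drains a list of `Response` promises one at a time. This is a standalone illustration, not the `workbox-streams` code, and the function name is hypothetical. Because the caller creates all the promises up front, the requests for the head, body, and footer are already in flight while earlier parts are still being streamed.

```typescript
// Streams several responses back to back, in list order. The caller creates
// all the promises up front, so every request is already in flight; this
// function only consumes them sequentially.
function concatenateResponses(parts: Promise<Response>[]): ReadableStream<Uint8Array> {
  // Mark rejections as handled now; they still surface when awaited below.
  for (const part of parts) part.catch(() => undefined);

  return new ReadableStream<Uint8Array>({
    async start(controller) {
      try {
        for (const part of parts) {
          const response = await part;
          if (!response.body) continue; // a response with no body
          const reader = response.body.getReader();
          while (true) {
            const result = await reader.read();
            if (result.done) break;
            controller.enqueue(result.value);
          }
        }
        controller.close();
      } catch (error) {
        // Propagate the failure to whoever is reading the stream.
        controller.error(error);
      }
    },
  });
}
```

In a service worker this would be returned as `new Response(concatenateResponses([head, body, foot]), { headers: { "content-type": "text/html" } })`, so the browser can start rendering the head before the body has arrived.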

Yeah, yeah. Templates for that page there. Yep. And the main thing, I was just gonna, sorry, I lost my train of thought for a second.

There. All good. Yeah.

Do you, sorry, go ahead. I was just gonna say, I could show what this actually looks like in terms of network traffic.

Yeah, for sure. Let's, let's do that. We have about five minutes left.

And I know there's cool stuff going on here. Cool. Okay.

So I've actually already visited the site once, so I have my local service worker installed.

I have a little bit of stuff in the browser's Cache Storage now.

Okay. So I have a few things cached. You can see it kind of has like some specific JavaScript and CSS.

It has some content cached as well. But the next time I visit, actually, I'm going to just flip really quickly to offline.

So I have some logic in here that will take a look at the current state of the local cache and give a little bit of a UI cue as to which subpages are likely to work while offline.

So this is one I went to before. You can see there's a bunch of runtime requests for logging and things like that, which fail because I'm offline.

But the actual content is still there.
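A possible sketch of that offline cue, assuming each link's content lives in the cache under a known URL (for example, the JSON for a post). `offlineReadyLinks` and the `offline-ready` class are hypothetical, not taken from the blog's code.

```typescript
// Hypothetical cache lookup (a Cache from caches.open() fits this shape).
interface CacheLookup {
  match(url: string): Promise<Response | undefined>;
}

// Check all links in parallel and return the ones whose content is cached.
async function offlineReadyLinks(links: string[], cache: CacheLookup): Promise<Set<string>> {
  const checks = await Promise.all(
    links.map(async (link) => ((await cache.match(link)) ? link : null)),
  );
  return new Set(checks.filter((link): link is string => link !== null));
}

// In the page, the result could drive a CSS class, e.g.:
// for (const a of document.querySelectorAll("a")) {
//   a.classList.toggle("offline-ready", ready.has(a.href));
// }
```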

And there's also a little bit of fallback logic in here for pages that you happen to go to where you don't have necessary content cached.

And that corresponds to the fallback logic that we had in the service worker.
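The fallback for uncached pages might look like the sketch below, assuming a hypothetical precached partial at `/partials/offline.html` that replaces only the body, so the cached head and footer still render. `fetch()` rejects on network failures such as being offline, which is what triggers the fallback; an HTTP error status does not.

```typescript
// Hypothetical precached partial shown in place of an uncached post body.
const OFFLINE_PARTIAL_URL = "/partials/offline.html";

async function bodyWithFallback(
  url: string,
  cache: { match(url: string): Promise<Response | undefined> },
  network: (url: string) => Promise<Response>,
): Promise<Response> {
  const cached = await cache.match(url);
  if (cached) return cached;
  try {
    return await network(url);
  } catch {
    // Network failure: use the offline partial, or a last-resort response.
    const fallback = await cache.match(OFFLINE_PARTIAL_URL);
    return (
      fallback ??
      new Response("<p>You appear to be offline.</p>", {
        status: 503,
        headers: { "content-type": "text/html; charset=utf-8" },
      })
    );
  }
}
```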

But yeah, I think the important thing is you have a very minimal amount of data that needs to be transferred once you've cached most of the head and the footer independently.

The only thing that needs to be transferred to the browser's service worker is the actual body of the page.

And you have that network independence, you're able to work offline.

The first renders, before the browser's service worker is installed, happen very, very quickly in the Cloudflare Workers environment.

And everything seems to work pretty well. Nice. Could we maybe capture the network traffic between page routes?

So going from the home page again to a new article?

Yeah. I don't know. Preserve log. So let me just do it in this direction.

Yeah, from that direction. Because it's doing stale-while-revalidate, there's this revalidation request going on in the background.

So that's the little gear icon that you see next to that.

So it is doing some updates that don't block the actual rendering, but it's just an attempt to make sure things are right.

It's all happening in the service worker.

But if I look at the size column, like so much of that is happening in a cache, right?

Like it's just the browser checking against itself.

It's like, oh, we have this, like, you know, thanks past self for doing the work ahead.

Yeah. Yeah. And just one other thing I wanted to mention: we have flexibility here that you don't get from the app shell model, namely having completely different sections of your site that use completely different sets of templates.

So over here, I have a very generic setup: anything HTML uses the same sequence of templates.

But if you have five different sections of your site that each need their own set of headers and footers and all this other stuff, you could easily do that with this model.

So really love that flexibility.
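Per-section templates could be selected by URL prefix, as in this hypothetical sketch. The `/docs/` section and the template strings are invented for illustration (a real site would use its template engine), and titles are HTML-escaped before interpolation.

```typescript
// Escape text before interpolating it into HTML.
const escapeHtml = (text: string): string =>
  text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");

// Hypothetical head/foot template pair.
interface TemplateSet {
  head: (title: string) => string;
  foot: () => string;
}

const defaultTemplates: TemplateSet = {
  head: (title) => `<!doctype html><title>${escapeHtml(title)}</title><body>`,
  foot: () => "</body>",
};

// Hypothetical sections, matched by URL prefix in order.
const sectionTemplates: Array<[string, TemplateSet]> = [
  [
    "/docs/",
    {
      head: (title) => `<!doctype html><title>Docs: ${escapeHtml(title)}</title><body class="docs">`,
      foot: () => "</body>",
    },
  ],
];

function templatesFor(pathname: string): TemplateSet {
  const section = sectionTemplates.find(([prefix]) => pathname.startsWith(prefix));
  return section ? section[1] : defaultTemplates;
}
```

Since the same function runs in both the Cloudflare Worker and the browser's service worker, each section renders with the same templates no matter where the response is generated.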

Thanks. Yeah. That's a quick walkthrough. I mean, it works really well. I remember when you announced your new site, I was out walking the dogs and I did not have great cell service, but it's still loaded instantly.

And, you know, I was just clicking.

I was like, oh, of course it has service workers on it because it's Jeff.

But it worked. It worked really well, really snappy. And I was in what felt like a 2G area.

Yeah. Well, that is the promise of service workers.

And again, it's been great just playing around.

I feel like this is only the start of what's possible. And I'm hoping, you know, more frameworks start thinking about the service worker environment as a runtime, for Cloudflare Workers certainly.

And like once you have that, bringing it to the browser service worker as well is kind of a natural path forward.

So I'm looking forward to seeing what happens.

Sounds good. Well, thanks, Jeff, for joining. Where should people find you?

So I'm @jeffposnick on Twitter. And yeah, you'll usually see me tweeting about a lot of service worker stuff.

Sounds good. Thanks so much, Jeff.

This was awesome. Awesome. It's been a pleasure. Cheers.