💻 Jamstack gives you wings

Presented by: Obinna Ekwuno

Originally aired on November 16, 2021 @ 11:30 AM - 12:00 PM EST

Cloudflare's Full Stack Week Developer Speaker Series continues with this session on the Jamstack.

The Jamstack architecture lets frontend developers build things that would otherwise have required knowing a lot that falls outside the scope of frontend development. That overhead is abstracted away to third-party services and serverless functions, which helps developers focus on what they know best: the frontend code. In this talk we will look at some of these services and tell the story of how they all work together.

Transcript (Beta)

Hello, everyone, and thanks for tuning in. So today I'm going to be talking to you about how the JAMstack gives you wings and how you can be the front-end developer you've always wanted to be.

So if you're like me, a front-end developer, the first time you heard about the JAMstack, you may have been wondering, what is this magic and how can I optimize this?

We're going to focus on that more in this talk. My name is Obinna and I'm a weekend filmmaker and also a developer advocate at Cloudflare.

I work on the advocacy team and I try to advocate for serverless and the JAMstack as well.

So this is where we're going to start this journey: thinking about the way things evolve over time.

And as you can see here, Homo sapiens have always been very hungry fellows.

At the beginning of this image, he's just a monkey eating a banana.

And when he reaches his final form, he's still the same dude with the same banana.

I say this because the way Homo sapiens kept moving and evolving is the way we keep evolving in tech.

We keep improving on everything, and we're rarely doing anything truly new.

We're improving on what already exists, making it better and more optimized, and solving more problems as we go.

And you might be wondering, how is this relevant to the JAMstack?

When you think about it, the first time you heard about the JAMstack, I'm sure you asked the question, what is the JAMstack?

And you probably heard something along the lines of, oh, it's just JavaScript, APIs, and markup.

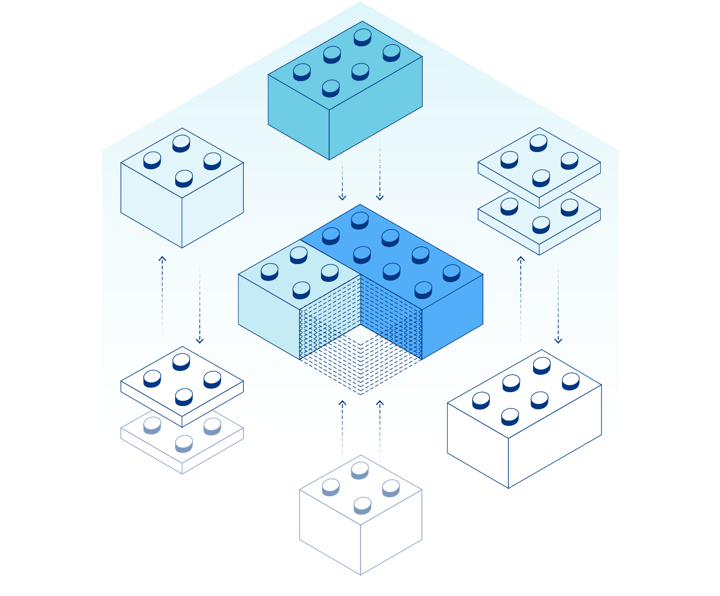

But in the true sense of the word, it's actually a web architecture: a way of building web applications that decouples your front end from your services or your back end and makes it easier for these pieces to interact.

It also makes it easier for you to focus on creating front-end applications, removing the complexity of handling proxy servers or your own back-end architecture, so you can focus on writing code for the parts you actually know how to build.

Like I said, as a front-end developer, you just want to focus on what you know.

And since we're on full stack week, this talk is also going to explain to us how front end developers can also build applications that are full stacky.

When you think about building with the JAMstack for the first time (or, if you haven't yet, when you eventually do), you're probably thinking: I need a JavaScript framework that does static site generation, I need to use some APIs, and I need a way to get static content into my application, right?

But I want to take a step back and say that the JAMstack is essentially just putting out a file with some content, like it's 1993 all over again.

And I know that you might be wondering, like, Obinna, what does this even mean?

What do you mean, it's 1993 all over again? Well, somebody once put it like this. Say you work in a coffee shop, and you have a website with the menu for the day and everything you're serving that day. The site is just HTML served from some bare-bones server, and every single morning you update the menu yourself. What you're doing there is updating the file every time you need to make a change, and that concept, in itself, is the JAMstack.

So what you're really doing is updating that file every time something changes. It's all static, it's secure because only you are touching it, and it's performant because it's just HTML, but it's not scalable.

Like I said before, we keep trying to improve the things we have by finding new ways to do the same things with better results and more scalability.

So now, if we go back to this definition of the JAMstack as JavaScript, APIs, and markup, you can see that we've moved from updating that HTML ourselves to abstracting different parts of the application to different services.

I'll talk more about this as we go further.

But the gist of it all is that with the JAMstack, your front end is decoupled from the back end, your data is pulled in as needed through services, and instead of doing the updates yourself, you're using a service that is more reliable and more scalable.

So imagine you had 10 other coffee shops. You're not riding your bicycle to each of those shops to update everything.

You make the change once, and it changes everywhere.

You can think of your project as pieces, not as one whole.

You can think of your front end as just the front end and focus on building that, while your APIs and your markup are updated on their own.

And you can handle most of your heavy lifting with serverless, which we'll talk more about.

So let's take a step back now and focus just on the front end.

When we think of the life cycle of front-end applications, we usually think of something like this. Say you have a marketing site. When somebody requests something from that marketing site, it sends a request to the web server.

That web server then sends a request to an app server, your back end, which goes to the database or to a CMS that has that information.

Then it's all bundled up and sent back to the web server, saying, hey, this is what you were looking for.

And that gets sent back to the marketing site. While this works, it's sometimes slow.

And as humans, we try to optimize. You might hear me say this a lot throughout this talk, because as we keep trying to optimize, we keep finding ways to make things better.

When the JAMstack paradigm came back into fashion, it started from this idea: the client, which is your computer, shouldn't have to make all of these requests to different servers. It should make a request to a CDN, get the information it needs, and then make requests to different services as needed, depending on what the user is doing at the time.
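To make the difference concrete, here is a back-of-the-envelope sketch in TypeScript. The round-trip times are hypothetical numbers chosen for illustration, not measurements; the point is only that sequential hops add up, while a request to a nearby CDN node pays for one short hop. It ignores rendering time and any API calls the page makes afterwards.

```typescript
// Illustrative only: every round-trip time below is a hypothetical value, not a measurement.
interface Hop {
  name: string;
  roundTripMs: number;
}

// Requests in the traditional chain happen one after another, so their latencies add up.
function totalLatencyMs(hops: Hop[]): number {
  return hops.reduce((sum, hop) => sum + hop.roundTripMs, 0);
}

// Marketing site -> web server -> app server -> database/CMS, then back again.
const traditional: Hop[] = [
  { name: "browser to web server", roundTripMs: 80 },
  { name: "web server to app server", roundTripMs: 10 },
  { name: "app server to database/CMS", roundTripMs: 20 },
];

// JAMstack: the prebuilt page comes straight from a nearby CDN node.
const jamstack: Hop[] = [{ name: "browser to nearby CDN node", roundTripMs: 15 }];

console.log(`traditional: ${totalLatencyMs(traditional)} ms`); // traditional: 110 ms
console.log(`jamstack: ${totalLatencyMs(jamstack)} ms`); // jamstack: 15 ms
```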

So we also started trying to make the user experience better, because at the heart of every business, regardless of how the business logic is defined, the goal is always a better user experience at low latency.

So you're trying to get that information to your users as fast and as well as you can.

And deploying your front end takes away about 50% of your worries, because when you build the JAMstack way, all you're worried about is building your front-end application and deploying it to your users. Everything else falls into place, because you've abstracted your other concerns, like handling servers and scaling up, to services.

So now the only thing you're concerned about is your front end, but that's just 50% of the work.

But if you're like me, and you wonder, when I deploy this front end code, where does it all go?

What happens to it?

Where does it live now? I know that it lives on a CDN, but how close is that CDN to my users and all of that?

Personally, because I've tried to understand how this works, I use a service called Cloudflare Pages to deploy my applications.

Cloudflare Pages is built on top of Cloudflare's edge network and its serverless technology, Workers.

What this means is that when you deploy your front end to Cloudflare Pages, you can leverage Cloudflare's edge network, so all your front-end code lives on the edge, and by edge, I mean closer to your users.

We'll talk more about this in a few slides. But imagine if your front end were right within your users' reach, so that as soon as a user requests it, it comes directly to them.

That's the kind of feeling that you would have when using Cloudflare Pages.

And because most of the information is cached on the edge, every time people request it, you hear things like, oh, that's actually super fast.

That's actually nice. And that's the experience the JAMstack aims for.

And just to top it all off, when using Cloudflare Pages, you can add more things on top of the APIs that you already use.

For example, say you're using a CMS to pull in content. Like I said, with the APIs, you focus on your front end: you've built out its structure, and you're pulling in information from your APIs. If you're using a CMS, you might want to trigger a build every time you make a change, without having to touch your front end at all.

Pages offers deploy hooks, which let you focus on making changes in your CMS without worrying about how that information is populated, whether you're triggering the right builds, or whether your build is working correctly.

So once you've connected a deploy hook to your CMS, every change you make there automatically updates your website.
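A deploy hook is a unique URL that Pages generates for a project; sending it a POST request starts a new build. The sketch below shows what the CMS side of that connection amounts to. `triggerDeployHook` and `PostFn` are hypothetical names, and the HTTP client is passed in so the function can be exercised without a network; in a Worker or in Node 18+, the global `fetch` fits the `PostFn` shape. Treat the hook URL like a secret, and keep it out of source control.

```typescript
// The only thing this sketch needs from an HTTP client; the global fetch fits this shape.
type PostFn = (
  url: string,
  init: { method: "POST" },
) => Promise<{ ok: boolean; status: number }>;

// Hypothetical helper: call it after content is published in the CMS.
// A POST to the deploy hook URL asks Pages to start a new build.
async function triggerDeployHook(hookUrl: string, post: PostFn): Promise<void> {
  const res = await post(hookUrl, { method: "POST" });
  if (!res.ok) {
    throw new Error(`Deploy hook request failed with HTTP ${res.status}`);
  }
}
```

Many CMSs can also be pointed at the hook URL directly in their webhook settings, in which case no code is needed at all; a helper like this only makes sense when you want your own logic to decide when a rebuild happens.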

You also get the ability to configure redirects, add security headers, and use framework presets.
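On Pages, redirects and custom headers are configured with two plain-text files, `_redirects` and `_headers`, placed in the build output directory. As a sketch of their format, here is a small, hypothetical build-step helper that produces the file contents from data: each `_redirects` line is `source destination [status]`, and `_headers` lists a path pattern followed by indented `Name: value` lines. The paths and header choices are examples only.

```typescript
interface Redirect {
  from: string;
  to: string;
  status?: 301 | 302 | 303 | 307 | 308; // Pages uses 302 when the status is omitted
}

// One rule per line: "<source> <destination> [status]".
function renderRedirects(rules: Redirect[]): string {
  const lines = rules.map((r) =>
    r.status === undefined ? `${r.from} ${r.to}` : `${r.from} ${r.to} ${r.status}`,
  );
  return lines.join("\n") + "\n";
}

// A path pattern on its own line, followed by indented "Name: value" header lines.
function renderHeaders(rules: Record<string, Record<string, string>>): string {
  const blocks = Object.entries(rules).map(([path, headers]) =>
    [path, ...Object.entries(headers).map(([name, value]) => `  ${name}: ${value}`)].join("\n"),
  );
  return blocks.join("\n") + "\n";
}

// Hypothetical example: move the menu page permanently and add two security headers everywhere.
const redirectsFile = renderRedirects([{ from: "/menu", to: "/menu/today", status: 301 }]);
const headersFile = renderHeaders({
  "/*": { "X-Frame-Options": "DENY", "X-Content-Type-Options": "nosniff" },
});
console.log(redirectsFile + "\n" + headersFile);
```

Most projects simply commit these two files by hand; generating them is mainly useful when the rules come from data, such as a list of moved pages exported from a CMS.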

We've optimized most of the front-end frameworks you use to build your applications so they run on Pages without any problems.

That way, they have first-class support and can be as fast as they can be.

So my advice to you today is: let your front end live on the edge so that it can be closer to your users, and you can get the most out of the JAMstack paradigm.

Just focus on the front end and making sure that your front end application is as fast as it can be.

Now, the second part of the JAMstack is the APIs.

And these APIs are the exciting part, because this is what gives us superpowers.

Think back to when you wanted to build an application. We'll go back to this diagram again.

When you wanted to build an application, you built your front end, but that front end needed a back end as well.

It needed a place for all the logic of getting information from the database, maybe from another server somewhere, putting that information together, and sending it to your users when they request it, right?

But with the rise of what I like to call service products, we're seeing a different kind of shift, where your clients request information from a CDN, or from a service that uses a CDN, and get it immediately.

That's mostly because, like I said, the JAMstack wants us to focus on what is important to us and abstract the things that we can.

In other words, outsource what we can. Services like Auth0 can handle authentication for you.

There's also Cloudflare Access, which can handle authentication for the users of your site.

So instead of setting up your own authentication infrastructure, you can use that service, and it's plug and play.

You can handle content with CMSs like Contentful and Sanity.

Or you can just do it with Markdown. But we'll get to that later.

The point is that you stay focused on building your front end, which is what the JAMstack architecture wants you to do: focus on the things you know, and hand off the things that would usually cause you a lot of headaches to services that can handle them at scale and scale for you.

You can handle things like functions, search, and more with these architectures.

And this works, because all of this is served to you. If we look back here, it's served to you over a CDN and through these networks.

And that's nice. But as always, I like to wonder, because as human beings, like I showed you in the beginning, we're always wondering: how can we optimize what already exists?

How can we add more functionality to what already exists?

So imagine if there were a way to get all those services to your users as fast as possible, all around the world.

Right? So instead of relying on CDNs with nodes in different places around the globe, is it possible to get this information to your users as fast as they need it?

Because it's accessible to them wherever they are.

So can we take it a step further and make the services a bit more servicey, and optimize them even more than they already are?

Like, how fast can you really go?

And I want you to notice one important thing: it's not enough to be close to your servers, right?

Building with the Jamstack is one thing, and deploying to services is another.

But you also want to be closer to your users, which is really important when it comes to offering the best experience possible.

Remember that at the heart of every business is giving users a great experience at low latency.

This is another part that's really exciting for me, because trying to push services further led me to a service that I'm going to share with you.

And you guessed it: it's Cloudflare Workers. Like I said, we use Workers at Cloudflare to build most of our own services.

Like I explained before, Cloudflare Workers is a serverless platform that powers most of the stuff at Cloudflare.

And it runs on each of our edge nodes.

What this means is that any time you deploy a Worker, the same code you've deployed is deployed all around this edge network, which looks something like this.

So think of it this way: I'm currently in London, and if I deploy something to Cloudflare's network, it's replicated across these different edge locations.

So if somebody requests your front-end code, it comes from one of the locations closest to them, because the request doesn't need to go any further.

It just goes to the closest one.
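As a mental model of "it just goes to the closest one," the sketch below picks the geographically nearest location using the haversine great-circle formula. This is a simplification: Cloudflare routes requests with anycast, so the network path, not a distance calculation, decides which data center answers, and that is usually but not always the closest one. The coordinates are approximate and the list of locations is a hypothetical subset.

```typescript
interface Site {
  name: string;
  lat: number; // degrees
  lon: number; // degrees
}

const EARTH_RADIUS_KM = 6371;
const toRadians = (degrees: number): number => (degrees * Math.PI) / 180;

// Great-circle distance between two points using the haversine formula.
function distanceKm(a: Site, b: Site): number {
  const dLat = toRadians(b.lat - a.lat);
  const dLon = toRadians(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Toy model only: real routing is done by anycast, not by this calculation.
function nearestSite(user: Site, sites: Site[]): Site {
  return sites.reduce((best, site) => (distanceKm(user, site) < distanceKm(user, best) ? site : best));
}

// A hypothetical handful of edge locations with approximate coordinates.
const edge: Site[] = [
  { name: "London", lat: 51.51, lon: -0.13 },
  { name: "Lagos", lat: 6.52, lon: 3.38 },
  { name: "Singapore", lat: 1.35, lon: 103.82 },
  { name: "New York", lat: 40.71, lon: -74.01 },
];

const visitorInParis: Site = { name: "visitor", lat: 48.86, lon: 2.35 };
console.log(nearestSite(visitorInParis, edge).name); // London
```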

The same goes for your service, like your API, if it's written as a Worker.

For example, if you wanted to read information from a table, and you've written that logic as a Worker and deployed it, that code would also run in the location closest to whoever is requesting the information.
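Here is roughly what an API written as a Worker looks like: a minimal Worker in the ES module format, whose default export has a `fetch(request)` handler that returns a `Response`. The `/api/menu` route and the menu data are hypothetical, standing in for logic that would read from a table or another service. Once deployed, this same handler runs in every edge location, so whichever location receives a request answers it. In a real Worker, `fetch` also receives `env` (bindings such as KV namespaces) and `ctx` arguments, which are omitted here.

```typescript
// Hypothetical data standing in for whatever your API reads from a table or service.
const menu = [
  { item: "Espresso", priceUsd: 3 },
  { item: "Tea", priceUsd: 2 },
];

// Minimal Worker in ES module syntax: the runtime calls fetch() for each incoming request.
const worker = {
  async fetch(request: Request): Promise<Response> {
    const url = new URL(request.url);
    if (request.method === "GET" && url.pathname === "/api/menu") {
      return new Response(JSON.stringify(menu), {
        headers: { "content-type": "application/json" },
      });
    }
    return new Response("Not found", { status: 404 });
  },
};

export default worker;
```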

My friend Sunil explains Workers to me as a stretchy server.

It's connected to each node, and it keeps stretching: the more the edge and its service points expand, the more reach you have to your users.

And this is a very reliable network that you want to leverage.

So what does this give you? Extending your existing services with Workers gives you a better user experience.

Like I said, all of this happens on the edge.

As soon as people try to get that information, it comes directly to them.

There's no cold-start delay, because Workers don't have cold starts.

It's always ready to go. And your functions are closer to the client: because you've deployed them to the edge locations, they're always close to whoever calls them.

So every time someone requests that function, it fires up quickly.

And you can also cache on the edge.

Because I know the next thing you'll be wondering is: okay, I'm using Workers, and the function is close to the user, but my data is coming from somewhere else, so I'm still sending a request to a database somewhere, right?

What if that database isn't on the edge? And, yes, I know.

It's not enough to have your function closer to the user. What about the data?

Well, we've answered that question. Cloudflare has a bunch of services, like Workers KV, our key-value store, which is built for data that is written infrequently and read frequently.

In other words, information that you need to read a lot.

So usually, when a request comes in through a Worker that's using KV, you can cache that information on the edge, right?

So your users don't have to make that same request over and over again.

Once it's cached there, you don't need to make that round trip again.

You just get the information to the user as soon as they request it, because it's already cached.
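The caching pattern described here is often called cache-aside: check the edge store first, and only on a miss go to the origin and save the result. The sketch below relies on two Workers KV behaviors: `get` resolves to `null` for a missing key, and `put` accepts an `expirationTtl` in seconds (KV requires at least 60). `KVLike`, `getWithCache`, and the in-memory `MemoryKV` stand-in are hypothetical names for illustration; in a deployed Worker, the real KV namespace would come from the `env` bindings.

```typescript
// The subset of a Workers KV namespace this sketch uses.
interface KVLike {
  get(key: string): Promise<string | null>;
  put(key: string, value: string, options?: { expirationTtl?: number }): Promise<void>;
}

// Cache-aside: serve from KV when possible; otherwise load from the (hypothetical)
// origin once and store the result so later reads skip the round trip.
async function getWithCache(
  kv: KVLike,
  key: string,
  loadFromOrigin: () => Promise<string>,
  ttlSeconds = 300,
): Promise<string> {
  const cached = await kv.get(key);
  if (cached !== null) return cached;
  const fresh = await loadFromOrigin();
  await kv.put(key, fresh, { expirationTtl: ttlSeconds });
  return fresh;
}

// In-memory stand-in for local experiments; unlike KV, it ignores expiry.
class MemoryKV implements KVLike {
  private readonly store = new Map<string, string>();
  async get(key: string): Promise<string | null> {
    return this.store.get(key) ?? null;
  }
  async put(key: string, value: string): Promise<void> {
    this.store.set(key, value);
  }
}
```

KV is eventually consistent, so a new value can take a while to become visible in every location. That is one reason it suits data that is read far more often than it is written, as described above.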

And because we keep trying to expand our reach, yesterday we announced partnerships with MongoDB and Prisma, and we also have a partnership with Fauna.

You can use these services with Cloudflare's edge caching, and getting information from them will still be very fast.

I'm talking about databases here. And you can also use things like Durable Objects.

And like I said, at Cloudflare, we believe in dogfooding our own products.

We use Workers to build some of our other products, like Stream, Images, and Pages, as I've mentioned.

So the pain points you feel when using Workers, we feel too.

So we try to optimize these things, because when we solve problems for ourselves, we're also solving them for you.

And I know you're wondering: is this a Workers talk or a JAMstack talk?

I'm trying to explain how to optimize your front end with services that make it even faster than the JAMstack already is.

And one does not simply talk about the JAMstack without the M, which is the markup, right?

The way I think about this, we have the templates: the static site frameworks, all the frameworks we've come to know and love and use, like Next, Nuxt, Remix, Astro, Svelte, and SvelteKit. All of these offer us some sort of templates, and each comes with its own way of building things, but most of them support static site generation.

Static site generation, in this sense, means getting your information to your users as a single file.

However you create your front end, whatever you're building, and wherever you draw information from, all of it is generated at build time and served to your client as a single file, just like 1993.
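To make "generated at build time and served as a single file" concrete, and to tie it back to the coffee-shop menu, here is a minimal hypothetical build step. It takes the day's menu data, which in a real project might come from a CMS or Markdown files, and renders one self-contained HTML document that a CDN can serve as-is. Real static site generators add routing, templates, and asset handling on top of this idea.

```typescript
interface MenuItem {
  name: string;
  priceUsd: number;
}

// Escape characters that are significant in HTML so content can't break the markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Runs once at build time and returns a complete HTML document as a string.
function renderMenuPage(date: string, items: MenuItem[]): string {
  const rows = items
    .map((item) => `      <li>${escapeHtml(item.name)}: $${item.priceUsd.toFixed(2)}</li>`)
    .join("\n");
  return [
    "<!doctype html>",
    "<html>",
    `  <head><title>Menu for ${escapeHtml(date)}</title></head>`,
    "  <body>",
    "    <ul>",
    rows,
    "    </ul>",
    "  </body>",
    "</html>",
    "",
  ].join("\n");
}

// Hypothetical content; in a JAMstack build this would be pulled from a CMS or Markdown.
const page = renderMenuPage("2021-11-16", [
  { name: "Espresso", priceUsd: 3 },
  { name: "Fish & Chips", priceUsd: 12.5 },
]);
console.log(page);
```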

A single file. This brings me back to what I was saying before: we just keep improving things without doing anything new.

It's the same thing all over again. And because we're thinking of the markup as a single file, it has to be optimized at build time.

But you might be wondering: okay, we're not all building static stuff.

Some things have to be dynamic.

And the JAMstack hears you, because we're always trying to improve the way we optimize things.

Because another problem is build time: it's fine when you're building 10 or 20 pages at build time.

But the more pages you have, the more complex it gets to compile all of them at build time.

It takes more time. So different frameworks have come up with a lot of different solutions to this.

Gatsby just released DSG, which is Deferred Static Generation.

It essentially means that instead of building every file at build time, it builds the urgent ones and defers the ones that aren't urgent.

Then, as soon as someone requests one of the deferred pages, it generates that page for them.

These are usually the less visited routes.
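The idea behind deferred generation can be modeled in a few lines. This is a conceptual sketch, not Gatsby's API (in Gatsby, a page opts in by passing `defer: true` to `createPage`): urgent pages are rendered during the build, and any other page is rendered the first time it's requested and then kept, so later visitors get the stored copy. `DeferredSite` and the render function are hypothetical.

```typescript
type Renderer = (path: string) => string;

// Conceptual model of deferred static generation (not Gatsby's implementation).
class DeferredSite {
  private readonly pages = new Map<string, string>();
  private readonly render: Renderer;
  renderCount = 0; // how many times a page was actually generated

  // Build time: render only the urgent pages up front.
  constructor(render: Renderer, urgentPaths: string[]) {
    this.render = render;
    for (const path of urgentPaths) this.generate(path);
  }

  private generate(path: string): string {
    this.renderCount++;
    const html = this.render(path);
    this.pages.set(path, html);
    return html;
  }

  // Request time: serve the stored page, generating a deferred page on its first request.
  get(path: string): string {
    return this.pages.get(path) ?? this.generate(path);
  }
}

// Hypothetical usage: the home and menu pages are urgent; old archive pages are deferred.
const site = new DeferredSite((path) => `<h1>${path}</h1>`, ["/", "/menu"]);
site.get("/archive/2019"); // generated now, on first request
site.get("/archive/2019"); // served from the stored copy
console.log(site.renderCount); // 3
```

The trade-off is the one described above: builds only pay for the urgent pages, and the first visitor to a deferred page waits for it to be generated.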

Astro also does some amazing things with server-side rendering, which lets you make your application server-side rendered.

And frameworks like Next let you mark code to be server-side rendered.

What this means is that the code only runs when the client actually needs it, when they request it.

It doesn't slow down your builds, but it's still essential to the whole experience.

If you take anything away from everything I've said, it's this: we've taken something that used to mean changing an index file by hand every time we wanted to add information, and we've optimized it.

We've focused on building the front end.

Just the front end, and optimizing that bit. Then we outsource everything that isn't needed at that point to third parties.

Things we didn't want to think about. We didn't want to have to learn Express or build the middleware to access all of these things.

Now we can leave all of that to the experts: we write our code and watch the experts scale it for us and handle all of those things.

And if you think about it, we're now one step closer to world domination.

Because we keep improving on these services, and if you're like me and want to push it a little further, you can try to get everything to your users as fast as possible so they have that fast experience.

And the more you keep doing these things, the more you see that you're actually one step closer to world domination.

So, I want to thank you so much for listening.

And please reach out to me on Twitter if you have any questions. I'm always happy to discuss the Jamstack.

And I'm sending you all the force and thank you very much.

In this demo, we'll show you how to configure an identity provider, build access policies, and enable Access App Launch.

Before enabling Access, you need to create a Cloudflare account and add a domain to Cloudflare.

If you have a Cloudflare account, sign in, navigate to the Access app, and then click Enable Access.

For this demo, Cloudflare Access is already enabled, so let's move on to the next step: configuring an identity provider.

Depending on your subscription plan, Access supports integration with all major identity providers (IdPs) that support OIDC or SAML.

To configure an IdP, click the Add button in the Login methods card, then select an identity provider.

For the purposes of this demo, we're going to choose Azure AD.

Follow the provider-specific setup instructions to retrieve the application ID and application secret, along with the directory ID.

Toggle Support groups on if you want to give Cloudflare access to specific SAML attributes about the users in your Azure AD tenant.

Enter the required fields, then click save.

If you'd like to test the configuration after saving, click the test button.

Cloudflare Access policies allow you to protect an entire website or resource by defining specific users or groups to deny, allow, or ignore.

For the purposes of this demo, we're going to create a policy to protect a generic internal resource, resourceonintra.net.

To set up your policy, click Create access policy.

Let's call this application Internal Wiki. As you can see here, policies can apply to an entire site, a specific path, an apex domain, a subdomain, or all subdomains using a wildcard policy.
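To illustrate the difference between those scopes, here is a simplified, hypothetical matcher for an application domain such as `example.com`, `example.com/wiki`, or `*.example.com`. It assumes that a wildcard covers subdomains but not the apex domain, and that a path limits the policy to that path and anything below it. Cloudflare's documentation defines the exact rules Access applies; this sketch does not reproduce them.

```typescript
// Hypothetical, simplified matcher for an Access-style application domain.
function appliesTo(pattern: string, host: string, path: string): boolean {
  const slash = pattern.indexOf("/");
  const patternHost = (slash === -1 ? pattern : pattern.slice(0, slash)).toLowerCase();
  const patternPath = slash === -1 ? "/" : pattern.slice(slash);
  const requestHost = host.toLowerCase();

  // "*.example.com" matches any subdomain of example.com, but not example.com itself.
  const hostMatches = patternHost.startsWith("*.")
    ? requestHost.endsWith(patternHost.slice(1))
    : requestHost === patternHost;

  // "/wiki" covers "/wiki" and "/wiki/...", but not "/wikipedia".
  const prefix = patternPath.endsWith("/") ? patternPath : patternPath + "/";
  const pathMatches = patternPath === "/" || path === patternPath || path.startsWith(prefix);

  return hostMatches && pathMatches;
}

console.log(appliesTo("*.example.com", "wiki.example.com", "/")); // true
console.log(appliesTo("example.com/wiki", "example.com", "/wikipedia")); // false
```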

Session duration determines the length of time an authenticated user can access your application without having to log in again.

This can range from 30 minutes to one month. Let's choose 24 hours. For the purposes of this demo, let's call the policy "Just me."

For the action, you can choose Allow, Deny, Bypass, or Non-identity.

Non-identity policies enforce authentication flows that don't require an identity provider (IdP) login, such as service tokens.

You can choose to include users by an email address, emails ending in a certain domain, Access groups (policies defined within the Access app in the Cloudflare dashboard), IP ranges (so you can lock a resource down to a specific location or whitelist a location), or your existing Azure groups.

Large businesses with complex Azure groupings tend to choose this option.

For this demo, let's use an email address.

After finalizing the policy parameters, click save.
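The include options above, together with the Exclude and Require options that come up again with App Launch, combine in a documented way: Cloudflare describes Include rules as OR, Require rules as AND, and Exclude rules as NOT. The toy evaluator below models only that combination logic for email and IPv4 range selectors. It is a hypothetical sketch, not how Access is implemented, and it leaves out identity-provider groups, Access groups, and the policy action (Allow, Deny, Bypass) that decides what a match means.

```typescript
interface Identity {
  email: string;
  ip: string; // IPv4 only in this sketch
}

type Rule = (who: Identity) => boolean;

// Selectors loosely mirroring the include options described above.
const emailIs = (address: string): Rule => (who) =>
  who.email.toLowerCase() === address.toLowerCase();

const emailEndsWith = (domain: string): Rule => (who) =>
  who.email.toLowerCase().endsWith("@" + domain.toLowerCase());

// Dotted IPv4 address to an unsigned 32-bit integer.
function ipv4ToInt(ip: string): number {
  return ip.split(".").reduce((acc, octet) => ((acc << 8) | Number(octet)) >>> 0, 0);
}

// CIDR range check, e.g. "10.0.0.0/8".
const ipInRange = (cidr: string): Rule => {
  const [base, bits] = cidr.split("/");
  const prefix = Number(bits);
  const mask = prefix === 0 ? 0 : (~0 << (32 - prefix)) >>> 0;
  const network = (ipv4ToInt(base) & mask) >>> 0;
  return (who) => ((ipv4ToInt(who.ip) & mask) >>> 0) === network;
};

interface Policy {
  include: Rule[];
  require?: Rule[];
  exclude?: Rule[];
}

// Include rules are OR-ed, require rules are AND-ed, and any exclude rule vetoes.
function policyMatches(policy: Policy, who: Identity): boolean {
  const included = policy.include.some((rule) => rule(who));
  const required = (policy.require ?? []).every((rule) => rule(who));
  const excluded = (policy.exclude ?? []).some((rule) => rule(who));
  return included && required && !excluded;
}

// Hypothetical policy: anyone at example.com on the 10.0.0.0/8 network, except one contractor.
const wikiPolicy: Policy = {
  include: [emailEndsWith("example.com")],
  require: [ipInRange("10.0.0.0/8")],
  exclude: [emailIs("contractor@example.com")],
};

console.log(policyMatches(wikiPolicy, { email: "alice@example.com", ip: "10.1.2.3" })); // true
```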

To test this policy, let's open an incognito window and navigate to the resource, resourceonintra.net.

Cloudflare has inserted a login screen that forces me to authenticate.

Let's choose Azure AD, log in with the Microsoft username and password, and click sign in.

After a successful authentication, I'm directed to the resource.

This process works well for an individual resource or application, but what if you have a large number of resources or applications?

That's where Access App Launch comes in handy.

Access App Launch serves as a single dashboard for your users to view and launch their allowed applications.

Our test domain already has Access App Launch enabled, but to enable this feature, click the Create App Launch Portal button, which usually shows here.

In the Edit Access App Launch dialog that appears, select a rule type from the include drop-down list.

You have the option to include the same types of users or groups that you do when creating policies.

You also have the option to exclude or require certain users or groups by clicking these buttons.

After configuring your rule, click Save.

After saving the policy, users can access the App Launch portal at the URL listed on the Access App Launch card.

If you or your users navigate to that portal and authenticate, you'll see every application you're allowed to view based on the Cloudflare Access policies you've configured.

Now, you're ready to get started with Cloudflare Access.

In this demo, you've seen how to configure an identity provider, build access policies, and enable Access App Launch.

To learn more about how Cloudflare can help you protect your users and network, visit teams.cloudflare.com/access.